Active pixel sensor

An active-pixel sensor (APS) is an image sensor consisting of an integrated circuit containing an array of pixel sensors, each pixel containing a photodetector and an active amplifier. There are many types of active pixel sensors including the CMOS APS used most commonly in cell phone cameras, web cameras and in some DSLRs. Such an image sensor is produced by a CMOS process (and is hence also known as a CMOS sensor), and has emerged as an alternative to charge-coupled device (CCD) image sensors.

The term active pixel sensor is also used to refer to the individual pixel sensor itself, as opposed to the image sensor;[1] in that case the image sensor is sometimes called an active pixel sensor imager,[2] active-pixel image sensor,[3] or active-pixel-sensor (APS) imager.

History

The term active pixel sensor was coined by Tsutomu Nakamura who worked on the Charge Modulation Device active pixel sensor at Olympus,[4] and more broadly defined by Eric Fossum in a 1993 paper.[5]

Image sensor elements with in-pixel amplifiers were described by Noble in 1968,[6] by Chamberlain in 1969,[7] and by Weimer et al. in 1969,[8] at a time when passive-pixel sensors – that is, pixel sensors without their own amplifiers – were being investigated as a solid-state alternative to vacuum-tube imaging devices. The MOS passive-pixel sensor used just a simple switch in the pixel to read out the photodiode's integrated charge.[9] Pixels were arrayed in a two-dimensional structure, with an access-enable wire shared by the pixels in each row and an output wire shared by the pixels in each column. At the end of each column was an amplifier. Passive-pixel sensors suffered from many limitations, such as high noise, slow readout, and lack of scalability. Adding an amplifier to each pixel addressed these problems and resulted in the active-pixel sensor. Noble in 1968 and Chamberlain in 1969 created sensor arrays with active MOS readout amplifiers per pixel, in essentially the modern three-transistor configuration.

The CCD was invented in 1969 at Bell Labs. Because the MOS process of the time was highly variable and MOS transistors had characteristics that changed over time (Vt instability), the CCD's charge-domain operation was more manufacturable and quickly eclipsed MOS passive- and active-pixel sensors. A low-resolution "mostly digital" N-channel MOSFET imager with intra-pixel amplification, for an optical mouse application, was demonstrated in 1981.[10]

Another type of active pixel sensor is the hybrid infrared focal plane array (IRFPA), designed to operate at cryogenic temperatures in the infrared spectrum. The devices consist of two chips that are put together like a sandwich: one chip contains detector elements made in InGaAs or HgCdTe, and the other chip is typically made of silicon and is used to read out the photodetectors. The exact date of origin of these devices is classified, but by the mid-1980s they were in widespread use.

By the late 1980s and early 1990s, the CMOS process was established as a stable, well-controlled process and was the baseline process for almost all logic and microprocessors. There was a resurgence in the use of passive-pixel sensors for low-end imaging applications,[11] and of active-pixel sensors for low-resolution, high-function applications such as retina simulation[12] and high-energy particle detectors.[13] However, CCDs continued to have much lower temporal noise and fixed-pattern noise and were the dominant technology for consumer applications such as camcorders as well as for broadcast cameras, where they were displacing video camera tubes.

Eric Fossum, et al., invented the image sensor that used intra-pixel charge transfer along with an in-pixel amplifier to achieve true correlated double sampling (CDS) and low temporal noise operation, and on-chip circuits for fixed-pattern noise reduction, and published the first extensive article[5] predicting the emergence of APS imagers as the commercial successor of CCDs. Between 1993 and 1995, the Jet Propulsion Laboratory developed a number of prototype devices, which validated the key features of the technology. Though primitive, these devices demonstrated good image performance with high readout speed and low power consumption.

In 1995, personnel from JPL founded Photobit Corp., which continued to develop and commercialize APS technology for a number of applications, such as web cams, high-speed and motion-capture cameras, digital radiography, endoscopy (pill) cameras, DSLRs and camera phones. Many other small image sensor companies were founded shortly thereafter, owing to the accessibility of the CMOS process, and all quickly adopted the active pixel sensor approach.

Comparison to CCDs

APS pixels solve the speed and scalability issues of the passive-pixel sensor. They generally consume less power than CCDs, have less image lag, and require less specialized manufacturing facilities. Unlike CCDs, APS sensors can combine the image sensor function and image processing functions within the same integrated circuit. APS sensors have found markets in many consumer applications, especially camera phones. They have also been used in other fields, including digital radiography, military ultra-high-speed image acquisition, security cameras and optical mice. Manufacturers include Aptina Imaging (an independent spinout of Micron Technology, which purchased Photobit in 2001), Canon, Samsung, STMicroelectronics, Toshiba, OmniVision Technologies, Sony, and Foveon, among others. CMOS-type APS sensors are typically suited to applications in which packaging, power management, and on-chip processing are important. CMOS-type sensors are widely used, from high-end digital photography down to mobile-phone cameras.

Advantages of CMOS compared to CCD

The biggest advantage of a CMOS sensor is that it is typically less expensive than a CCD sensor. A CMOS camera is also largely resistant to blooming, the effect in which a bright light source overloads the sensor and the excess charge bleeds from the overexposed pixels into neighboring pixels.

Disadvantages of CMOS compared to CCD

Since a CMOS video sensor typically captures one row at a time, reading out the whole frame over approximately 1/60 or 1/50 of a second (depending on refresh rate), motion during readout may cause the image to skew (tilt to the left or right, depending on the direction of camera or subject movement). For example, when the camera tracks a car moving at high speed, the car will not be distorted but the background will appear tilted. A frame-transfer CCD sensor does not have this problem, because it captures the entire image at once into a frame store.
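The size of the skew can be estimated with a short calculation. The sketch below assumes rows are read at a uniform rate, so that the last row is captured almost one frame time after the first; the function name and the example numbers (480 rows, 600 pixels per second of apparent motion) are hypothetical and chosen only for illustration.

```python
def rolling_shutter_skew(rows, frame_time_s, speed_px_per_s):
    """Horizontal offset, in pixels, between the first and last row of a frame.

    Assumes uniform row-by-row readout over frame_time_s and a scene moving
    horizontally at a constant speed_px_per_s in the image plane.
    """
    row_time_s = frame_time_s / rows              # time spent on one row
    last_row_delay_s = row_time_s * (rows - 1)    # delay of last row vs. first
    return speed_px_per_s * last_row_delay_s


# Hypothetical example: 480 rows read out in 1/60 s, scene moving at 600 px/s.
skew = rolling_shutter_skew(rows=480, frame_time_s=1 / 60, speed_px_per_s=600)
print(f"skew between first and last row: {skew:.2f} px")
```

With these numbers the last row is displaced by just under 10 pixels relative to the first, which appears as a tilt of vertical edges.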

Architecture

Pixel

The standard CMOS APS pixel today consists of a photodetector (a pinned photodiode), a floating diffusion, a transfer gate, a reset gate, a selection gate and a source-follower readout transistor (the so-called 4T cell). The pinned photodiode was originally used in interline transfer CCDs because of its low dark current and good blue response. When coupled with the transfer gate, it allows complete charge transfer from the pinned photodiode to the floating diffusion (which is in turn connected to the gate of the read-out transistor), eliminating lag. The use of intrapixel charge transfer can offer lower noise by enabling the use of correlated double sampling (CDS).

The Noble 3T pixel is still often used because its fabrication requirements are less demanding. The 3T pixel comprises the same elements as the 4T pixel except the transfer gate and the pinned photodiode. The reset transistor, Mrst, acts as a switch to reset the floating diffusion, which in this case acts as the photodiode. When the reset transistor is turned on, the photodiode is effectively connected to the power supply, VRST, clearing all integrated charge. Since the reset transistor is n-type, the pixel operates in soft reset. The read-out transistor, Msf, acts as a buffer (specifically, a source follower), an amplifier that allows the pixel voltage to be observed without removing the accumulated charge. Its power supply, VDD, is typically tied to the power supply of the reset transistor. The select transistor, Msel, allows a single row of the pixel array to be read by the read-out electronics.

Other pixel designs, such as 5T and 6T pixels, also exist. Adding extra transistors makes functions such as global shutter, as opposed to the more common rolling shutter, possible. To increase pixel density, shared-row, four-way and eight-way shared readout, and other architectures can be employed.

A variant of the 3T active pixel is the Foveon X3 sensor invented by Dick Merrill. In this device, three photodiodes are stacked on top of each other using planar fabrication techniques, each photodiode having its own 3T circuit. Each successive layer acts as a filter for the layer below it, shifting the spectrum of absorbed light in successive layers. By deconvolving the response of each layered detector, red, green, and blue signals can be reconstructed.
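The benefit of correlated double sampling in a 4T pixel can be illustrated with a toy model. The sketch below is not a circuit simulation: it assumes the floating diffusion settles at a nominal level plus a random offset when reset, and that transferred charge then lowers the node by the signal voltage. The names `RESET_LEVEL_V` and `read_pixel` and all numeric values are hypothetical.

```python
import random

RESET_LEVEL_V = 1.0  # hypothetical nominal reset voltage of the floating diffusion


def read_pixel(signal_v, reset_noise_sigma_v, rng):
    """Simulate one 4T pixel readout; return (single_sample, cds) in volts."""
    # Reset: the node settles at the nominal level plus a random reset offset.
    reset_sample = RESET_LEVEL_V + rng.gauss(0.0, reset_noise_sigma_v)
    # Transfer: photo-generated charge lowers the floating-diffusion voltage.
    signal_sample = reset_sample - signal_v
    # A single sample referenced to the nominal level keeps the reset offset.
    single_sample = RESET_LEVEL_V - signal_sample
    # CDS subtracts the two samples, so the common reset offset cancels.
    cds = reset_sample - signal_sample
    return single_sample, cds


rng = random.Random(0)
single, cds = read_pixel(signal_v=0.3, reset_noise_sigma_v=0.01, rng=rng)
print(f"single sample: {single:.4f} V, CDS: {cds:.4f} V")
```

Because both samples are taken after the same reset, the offset is correlated between them and drops out of the difference; in a 3T pixel the reset sample that follows a readout belongs to the next integration period, so the cancellation is not exact.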

APS using TFTs

For applications such as large-area digital X-ray imaging, thin-film transistors (TFTs) can also be used in the APS architecture. However, because of the larger size and lower transconductance gain of TFTs compared to CMOS transistors, it is necessary to have fewer on-pixel TFTs to maintain image resolution and quality at an acceptable level. A two-transistor APS/PPS architecture has been shown to be promising for APS using amorphous silicon TFTs. In the two-transistor APS architecture, TAMP is used as a switched amplifier, integrating the functions of both Msf and Msel in the three-transistor APS. This results in a reduced transistor count per pixel, as well as increased pixel transconductance gain.[14] Here, Cpix is the pixel storage capacitance; it is also used to capacitively couple the addressing pulse of the "Read" line to the gate of TAMP for ON-OFF switching. Such pixel readout circuits work best with low-capacitance photoconductor detectors such as amorphous selenium.

Array

A typical two-dimensional array of pixels is organized into rows and columns. Pixels in a given row share reset lines, so that a whole row is reset at a time. The row select lines of each pixel in a row are tied together as well. The outputs of each pixel in any given column are tied together. Since only one row is selected at a given time, no competition for the output line occurs. Further amplifier circuitry is typically on a column basis.
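The row-by-row addressing described above can be sketched as a small software model. This is only an illustration of the addressing order, with arbitrary units and hypothetical class and method names; it does not model amplifiers, timing, or noise.

```python
class PixelArray:
    """Toy model of a row-addressed pixel array (values in arbitrary units)."""

    def __init__(self, rows, cols):
        self.rows, self.cols = rows, cols
        self.charge = [[0.0] * cols for _ in range(rows)]

    def expose(self, light):
        """Accumulate light[r][c] into every pixel."""
        for r in range(self.rows):
            for c in range(self.cols):
                self.charge[r][c] += light[r][c]

    def read_row(self, r):
        """Select row r: each pixel in that row drives its own column line."""
        return list(self.charge[r])

    def reset_row(self, r):
        """Pulse the reset line shared by all pixels in row r."""
        self.charge[r] = [0.0] * self.cols

    def read_frame(self):
        """Read the array one row at a time, resetting each row after readout."""
        frame = []
        for r in range(self.rows):  # only one row is selected at any time
            frame.append(self.read_row(r))
            self.reset_row(r)
        return frame


array = PixelArray(rows=2, cols=3)
array.expose([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
frame = array.read_frame()
print(frame)
```

Because `read_frame` selects a single row per iteration, each column line carries exactly one pixel value at a time, which mirrors why the shared column outputs do not conflict.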

Size

The size of the pixel sensor is often given in height and width, but also in the optical format.

Design variants

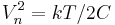

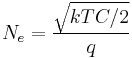

Many different pixel designs have been proposed and fabricated. The standard pixel is the most common because it uses the fewest wires and the fewest, most tightly packed transistors possible for an active pixel. It is important that the active circuitry in a pixel take up as little space as possible to allow more room for the photodetector. A high transistor count hurts fill factor, that is, the percentage of the pixel area that is sensitive to light. Pixel size can be traded for desirable qualities such as noise reduction or reduced image lag. Noise is a measure of the accuracy with which the incident light can be measured. Lag occurs when traces of a previous frame remain in future frames, i.e. the pixel is not fully reset. The voltage noise variance in a soft-reset (gate-voltage regulated) pixel is $V_n^2 = kT/(2C)$, but image lag and fixed pattern noise may be problematic. In rms electrons, the noise is $N_e = \sqrt{kTC/2}/q$.

Hard reset

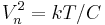

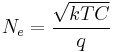

Operating the pixel via hard reset results in a Johnson–Nyquist noise on the photodiode of $V_n^2 = kT/C$ or $N_e = \sqrt{kTC}/q$, but prevents image lag, sometimes a desirable tradeoff. One way to use hard reset is to replace Mrst with a p-type transistor and invert the polarity of the RST signal. The presence of the p-type device reduces fill factor, as extra space is required between p- and n-devices; it also removes the possibility of using the reset transistor as an overflow anti-blooming drain, which is a commonly exploited benefit of the n-type reset FET. Another way to achieve hard reset, with the n-type FET, is to lower the voltage of VRST relative to the on-voltage of RST. This reduction may reduce headroom, or full-well charge capacity, but does not affect fill factor, unless VDD is then routed on a separate wire with its original voltage.
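For a sense of scale, the reset noise can be evaluated numerically. The sketch below uses the standard kTC expressions, a voltage variance of kT/C for hard reset and half of that for soft reset, converted to rms electrons by multiplying the rms voltage by C/q. The function name, the 5 fF sense-node capacitance, and the 300 K temperature are assumptions made for illustration only.

```python
import math

K_BOLTZMANN = 1.380649e-23     # Boltzmann constant, J/K
Q_ELECTRON = 1.602176634e-19   # elementary charge, C


def reset_noise_electrons(capacitance_f, temperature_k=300.0, soft=False):
    """Return rms reset noise in electrons for a hard- or soft-reset pixel."""
    variance_v2 = K_BOLTZMANN * temperature_k / capacitance_f  # hard reset: kT/C
    if soft:
        variance_v2 /= 2.0                                     # soft reset: kT/(2C)
    # Convert rms volts on the sense node to rms electrons: N = V * C / q.
    return math.sqrt(variance_v2) * capacitance_f / Q_ELECTRON


# Hypothetical sense-node capacitance of 5 fF at 300 K.
hard = reset_noise_electrons(5e-15)
soft = reset_noise_electrons(5e-15, soft=True)
print(f"hard reset: {hard:.1f} e- rms, soft reset: {soft:.1f} e- rms")
```

Under these assumptions the soft-reset noise is lower than the hard-reset noise by a factor of the square root of two, which is the tradeoff against image lag discussed in the text.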

Combinations of hard and soft reset

Techniques such as flushed reset, pseudo-flash reset, and hard-to-soft reset combine soft and hard reset. The details of these methods differ, but the basic idea is the same. First, a hard reset is done, eliminating image lag. Next, a soft reset is done, giving a low-noise reset without adding any lag. Pseudo-flash reset requires separating VRST from VDD, while the other two techniques add more complicated column circuitry. Specifically, pseudo-flash reset and hard-to-soft reset both add transistors between the pixel power supplies and the actual VDD. The result is lower headroom, without affecting fill factor.

Active reset

A more radical pixel design is the active-reset pixel. Active reset can result in much lower noise levels. The tradeoff is a complicated reset scheme, as well as either a much larger pixel or extra column-level circuitry.

References

- ^ Alexander G. Dickinson et al., "Active pixel sensor and imaging system having differential mode", US 5631704

- ^ Zimmermann, Horst (2000). Integrated Silicon Optoelectronics. Springer. ISBN 3540666621.

- ^ Lawrence T. Clark, Mark A. Beiley, Eric J. Hoffman, "Sensor cell having a soft saturation circuit", US 6133563

- ^ Kazuya Matsumoto et al., "A new MOS phototransistor operating in a non-destructive readout mode" Jpn. J. Appl. Phys. 24 (1985) L323

- ^ a b Eric R. Fossum (1993), "Active Pixel Sensors: Are CCD's Dinosaurs?" Proc. SPIE Vol. 1900, p. 2–14, Charge-Coupled Devices and Solid State Optical Sensors III, Morley M. Blouke; Ed.

- ^ Peter J. W. Noble (April 1968). "Self-Scanned Silicon Image Detector Arrays". IEEE Trans. Electron Devices ED-15 (4): 202–209.

- ^ Savvas G. Chamberlain (December 1969). "Photosensitivity and Scanning of Silicon Image Detector Arrays". IEEE Journal of Solid-State Circuits SC-4 (6): 333–342.

- ^ P. K. Weimer, W. S. Pike, G. Sadasiv, F. V. Shallcross, and L. Meray-Horvath (March 1969). "Multielement Self-Scanned Mosaic Sensors". IEEE Spectrum 6 (3): 52–65. doi:10.1109/MSPEC.1969.5214004.

- ^ R. Dyck and G. Weckler (1968). "Integrated arrays of silicon photodetectors for image sensing". IEEE Trans. Electron Devices ED-15 (4): 196–201. http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=1475068&isnumber=31645.

- ^ Richard F. Lyon (1981). "The Optical Mouse, and an Architectural Methodology for Smart Digital Sensors". In H. T. Kung, R. Sproull, and G. Steele. CMU Conference on VLSI Structures and Computations. Pittsburgh: Computer Science Press.

- ^ D. Renshaw, P. B. Denyer, G. Wang, and M. Lu (1990). "ASIC image sensors". IEEE International Symposium on Circuits and Systems 1990.

- ^ M. A. Mahowald and C. Mead (12 May 1989). "The Silicon Retina". Scientific American 264 (5): 76–82. doi:10.1038/scientificamerican0591-76. PMID 2052936.

- ^ D. Passeri et al. (May 2010). "Characterization of CMOS Active Pixel Sensors for particle detection: Beam test of the four-sensors RAPS03 stacked system". Nuclear Instruments and Methods in Physics Research A 617 (1–3): 573–575. Bibcode 2010NIMPA.617..573P. doi:10.1016/j.nima.2009.10.067. http://meroli.web.cern.ch/meroli/CharacterizationofCMOSActivePixelSensors.html.

- ^ F. Taghibakhsh and K. S. Karim (2007). "Two-Transistor Active Pixel Sensor for High Resolution Large Area Digital X-Ray Imaging". IEEE International Electron Devices Meeting: 1011–1014.

Further reading

- John L. Vampola (January 1993). "Chapter 5 - Readout electronics for infrared sensors". In David L. Shumaker. The Infrared and Electro-Optical Systems Handbook, Volume 3 - Electro-Optical Components. The International Society for Optical Engineering. ISBN 0-8194-1072-1. http://stinet.dtic.mil/stinet/XSLTServlet?ad=ADA364023. — one of the first books on CMOS imager array design

- Mary J. Hewitt; John L. Vampola, Stephen H. Black, and Carolyn J. Nielsen (June 1994). Eric R. Fossum. ed. "Infrared readout electronics: a historical perspective". Proceedings of SPIE (The International Society for Optical Engineering) 2226 (Infrared Readout Electronics II): pages 108–119. doi:10.1117/12.178474. http://link.aip.org/link/?PSISDG/2226/108/1.

- Mark D. Nelson; Jerris F. Johnson and Terrence S. Lomheim (November 1991). "General noise processes in hybrid infrared focal plane arrays". Optical Engineering (The International Society for Optical Engineering) 30 (11): 1682–1700. doi:10.1117/12.55996. http://link.aip.org/link/?OPEGAR/30/1682/1.

- Martin Vasey (September 2009). "CMOS Image Sensor Testing: An Integrated Approach". Jova Solutions. San Francisco, CA. http://www.jovasolutions.com/isl-white-paper/82-isl-3200-white-paper.

External links

- Active Pixel Sensors (APS) for the detection of ionizing particles: use of CMOS Active Pixel Sensors in high-energy physics experiments